AI voices have come a long way. They’re smoother, more expressive, and sometimes eerily close to human. But even the best ones still feel a little off. Something in the tone, pacing, or rhythm doesn’t sit right.

Ever listened to an AI voice and felt like it was too robotic? Or maybe too polished in a way that doesn’t feel real? That’s not a coincidence. AI-generated speech has built-in quirks that can make it sound unnatural, even as the technology gets more advanced.

But why? What makes AI voices sound strange even when they’re reading a well-written script? And more importantly—how can you make them sound better?

Let’s break it down.

What Makes a Voice Sound “Human” (And Why AI Struggles)

Think about the way people speak. It’s never perfectly smooth. There are pauses, hesitations, shifts in tone. Some words stretch out for emphasis, others get cut short. Conversations have rhythm—rising and falling like a wave.

Now, compare that to AI-generated speech. It follows a script exactly as it’s written. Every word gets the same level of attention. The pacing is often too consistent, almost metronomic. There’s no real instinct behind it, no subconscious shifts based on emotion or context.

A human voice can switch from casual to serious in an instant. It can add sarcasm, warmth, excitement, all without a second thought. AI, on the other hand, has to learn each of these from its training data or be told explicitly what to do. If the model wasn’t trained to recognize a certain tonal shift, it won’t produce it.

And then there’s the biggest challenge: emotion.

Even when AI voices can sound happy, sad, or dramatic, the effect often feels staged. That’s because real emotion is a mix of tiny, unpredictable vocal cues. A nervous voice might speed up slightly. A confident voice might slow down and lean into certain words. AI doesn’t naturally do any of this. It can mimic emotion, but it doesn’t feel anything, so the delivery lacks authenticity.

Some AI models are getting better at adding variation. But even with those, something still feels off. That’s where the uncanny valley effect comes in.

The Uncanny Valley Effect in AI Speech

Ever seen a digital character that looks almost real—but not quite? That unsettling feeling is called the uncanny valley. AI-generated voices have the same problem.

When a voice is obviously robotic, your brain accepts it for what it is. There’s no expectation of human-like emotion. But when a voice gets close to sounding real, every tiny mistake becomes more noticeable. A pause that’s half a second too long, a sentence that’s too perfectly structured, a moment where the tone shifts at the wrong time—these things pull you out of the experience.

That’s why some AI voices feel creepier than the robotic ones. They hover in an uncomfortable space between human and machine. The smoother and more polished they get, the more noticeable the weirdness becomes.

There’s another issue at play, too: AI voice training data. Many AI voices are built from hours of recordings, often from a single speaker. But humans don’t sound the same all the time. Mood, energy, and even background noise can change the way we speak. AI models trained on rigid, uniform data often fail to capture the natural messiness of human conversation.

So what happens when AI voices are built from data that’s too perfect? They lack the imperfections that make speech feel real.

Technical Limitations That Make AI Voices Sound Off

Even the best AI voice models have technical roadblocks that make their speech sound unnatural. Here’s what’s happening behind the scenes:

1. Lack of Real-Time Context

AI reads words in a linear way. It doesn’t truly understand meaning the way humans do. If a sentence is sarcastic, sentimental, or playful, an AI voice won’t always pick up on that. It delivers each line based on its training, not on actual comprehension.

For example, read this sentence aloud:

➡️ “Oh great, another Monday.”

Depending on the tone, that could be excitement or sarcasm. AI often picks a tone based on probability, not intent. So if it guesses wrong, the voice sounds weird—too cheerful when it should be dry, too flat when it should be animated.

2. Over-reliance on Predictive Speech Patterns

AI-generated speech is built on patterns. It learns from thousands of voice samples and follows statistical models to predict the best way to pronounce words. But speech isn’t always predictable.

In casual conversation, people break grammar rules all the time. We start sentences and don’t finish them. We add “uh” and “like” without thinking. AI sticks too closely to structure, which makes it sound rehearsed, even when it’s trying to be casual.

3. The Problem with Neutrality

Most AI voices aim for a middle ground—clear, professional, and neutral. The problem? True neutrality doesn’t exist in human speech.

Every voice has some kind of personality. A person telling a story might lean into certain words, slow down, or add energy to key moments. AI voices rarely do this unless programmed to. That’s why they often sound slightly detached, even when they’re delivering emotional content.

4. Limited Breath and Pausing Control

A human voice naturally adjusts breath and pauses based on thought. AI doesn’t breathe. It processes words in chunks, and unless the text includes proper punctuation or formatting, the result is a voice that speeds through at an unnatural pace.

This is why some AI-generated voiceovers feel like they’re rushing through sentences. Without the right breaks, everything blends together, making it harder to follow.
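One way to give a script the breaks it needs is SSML, the W3C markup for speech synthesis, which has a `<break>` tag for explicit pauses. The sketch below is a rough illustration, not a feature of any specific tool: the `add_breaks` helper and the pause lengths in `PAUSES_MS` are assumptions made up for this example, and whether your voice tool accepts SSML at all is something to check in its documentation.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical pause lengths in milliseconds; starting points to tune by ear.
PAUSES_MS = {",": 200, ";": 300, ":": 300, ".": 450, "?": 450, "!": 450}

def add_breaks(script: str) -> str:
    """Return SSML with a <break> after each punctuation mark followed by whitespace."""
    # The capturing group makes re.split return text and marks alternately:
    # [text, mark, text, mark, ..., text]
    parts = re.split(r"([,;:.?!])\s+", script)
    out = []
    for i, part in enumerate(parts):
        if i % 2 == 1:  # odd positions hold the punctuation marks
            out.append(f'{part}<break time="{PAUSES_MS[part]}ms"/> ')
        else:           # even positions hold plain text; escape &, <, > for XML
            out.append(escape(part))
    return "<speak>" + "".join(out) + "</speak>"

print(add_breaks("Oh great, another Monday. Coffee first."))
```

Because the pattern only matches punctuation followed by whitespace, a number like 3.5 is left alone. Longer pauses after sentences than after commas is a design choice here, not a rule, so adjust the values until the read sounds right.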

5. Struggles with Emphasis and Intonation

Humans instinctively emphasize certain words to shape meaning. AI struggles with this. It applies emphasis based on learned patterns or preset rules, which don’t always match how a human would deliver the same sentence.

For example, read these two sentences:

➡️ “I didn’t say **she** stole the money.”

➡️ “I didn’t say she stole the **money**.”

The emphasis completely changes the meaning. AI voices often fail to handle subtle shifts like this, which makes some sentences sound off even when they’re technically correct.
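If your tool supports SSML, you can spell out the stress yourself instead of leaving it to the model. Here is a minimal sketch using the standard `<emphasis>` tag. The `emphasize` helper is a hypothetical function written for this example, and SSML support is an assumption you should confirm for whatever platform you use.

```python
from xml.sax.saxutils import escape

def emphasize(sentence: str, target: str, level: str = "strong") -> str:
    """Wrap the first word matching `target` in an SSML <emphasis> tag."""
    out = []
    done = False
    for word in sentence.split():
        core = word.strip(".,!?;:")  # the word without surrounding punctuation
        if not done and core and core.lower() == target.lower():
            tagged = f'<emphasis level="{level}">{escape(core)}</emphasis>'
            # Put the tag around the core only, keeping punctuation outside it.
            out.append(escape(word).replace(escape(core), tagged, 1))
            done = True
        else:
            out.append(escape(word))
    if not done:
        raise ValueError(f"{target!r} not found in sentence")
    return "<speak>" + " ".join(out) + "</speak>"

sentence = "I didn't say she stole the money."
for target in ("she", "money"):
    print(emphasize(sentence, target))
```

Each call produces the same words with a different stressed word, which is the kind of control a plain-text script can’t express on its own.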

How AI Voices Can Actually Improve

Now that we’ve broken down why AI speech sounds unnatural, let’s talk about solutions. Some of these are user-side fixes, while others depend on advancements in AI voice technology.

- Choosing High-Quality AI Voices: Not all AI voice models are the same. Some have smoother intonation and better natural pacing.

- Using AI-Powered Prosody Correction: Some AI tools allow adjustments for pacing, tone, and emphasis.

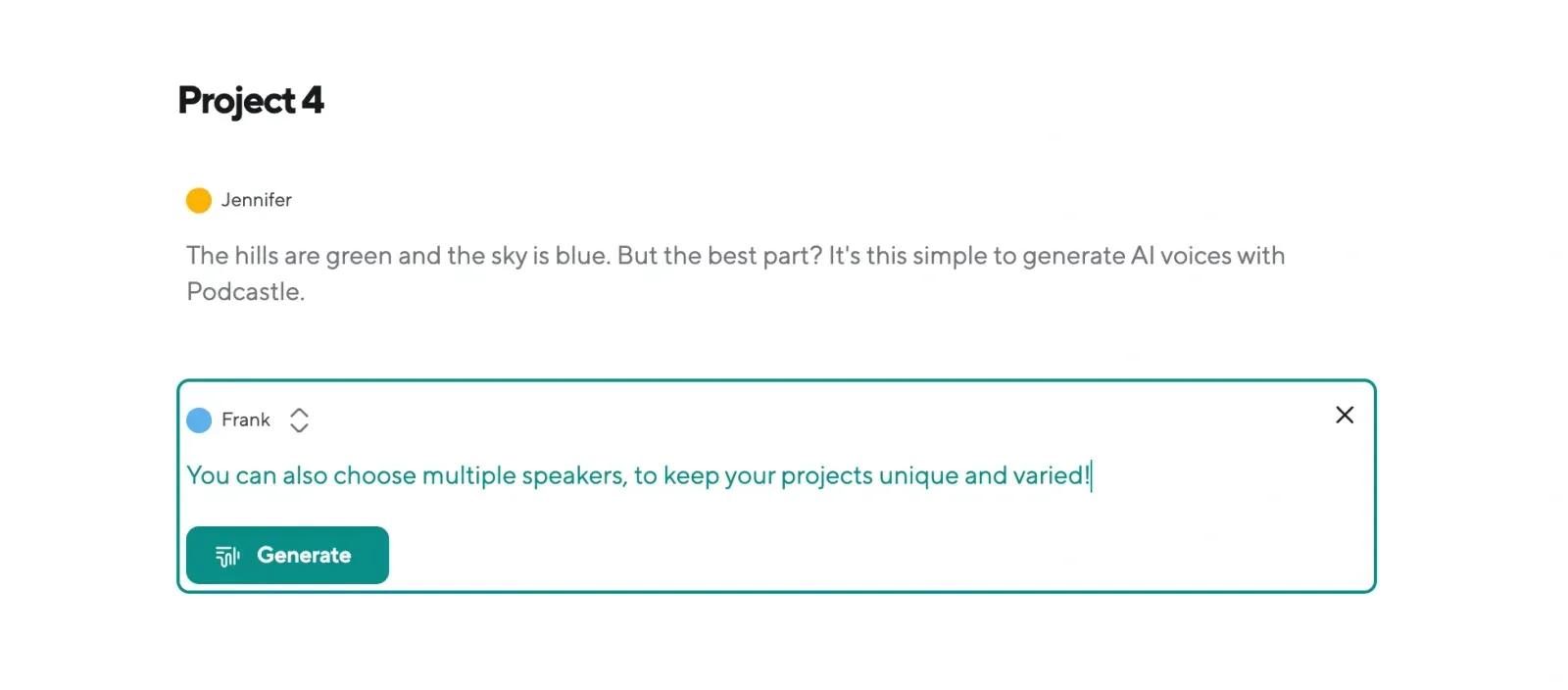

- Layering AI Voices for a More Dynamic Effect: Instead of using a single AI voice, mixing several can create more realistic back-and-forth dialogue.

- Customizing AI Speech Patterns: Some platforms allow fine-tuning for more expressive delivery.
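For tools that accept SSML, pacing and tone adjustments like the ones in this list can be written straight into the script with the standard `<prosody>` tag. The sketch below is only an illustration under that assumption: `prosody` and `build_ssml` are hypothetical helpers, and the rate and pitch choices are guesses to refine by listening.

```python
from xml.sax.saxutils import escape

def prosody(text: str, rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap one line of text in an SSML <prosody> tag with the given rate and pitch."""
    return f'<prosody rate="{rate}" pitch="{pitch}">{escape(text)}</prosody>'

def build_ssml(lines) -> str:
    """Join (text, settings) pairs into one SSML document."""
    spans = [prosody(text, **settings) for text, settings in lines]
    return "<speak>" + " ".join(spans) + "</speak>"

# A nervous line read faster and higher, a confident line slower and lower.
script = [
    ("I think we might have a problem.", {"rate": "fast", "pitch": "high"}),
    ("Here is what we are going to do.", {"rate": "slow", "pitch": "low"}),
]
print(build_ssml(script))
```

Keeping the settings next to each line makes it easy to vary delivery sentence by sentence rather than applying one global speed to the whole voiceover.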

The technology is improving fast. AI voices are becoming less robotic and more natural. But the key to making them work well today? Understanding where they fall short and using the right techniques to smooth things out.

How to Create AI Voices with Podcastle

Most AI voices struggle with natural speech, but Podcastle is different. Our voices are designed to sound authentic, with more expressive intonation, natural pacing, and lifelike delivery. Whether you’re creating voiceovers, narrations, or podcasts, Podcastle’s AI voices speak like real people, making your content sound professional without the robotic stiffness.

Getting started is simple:

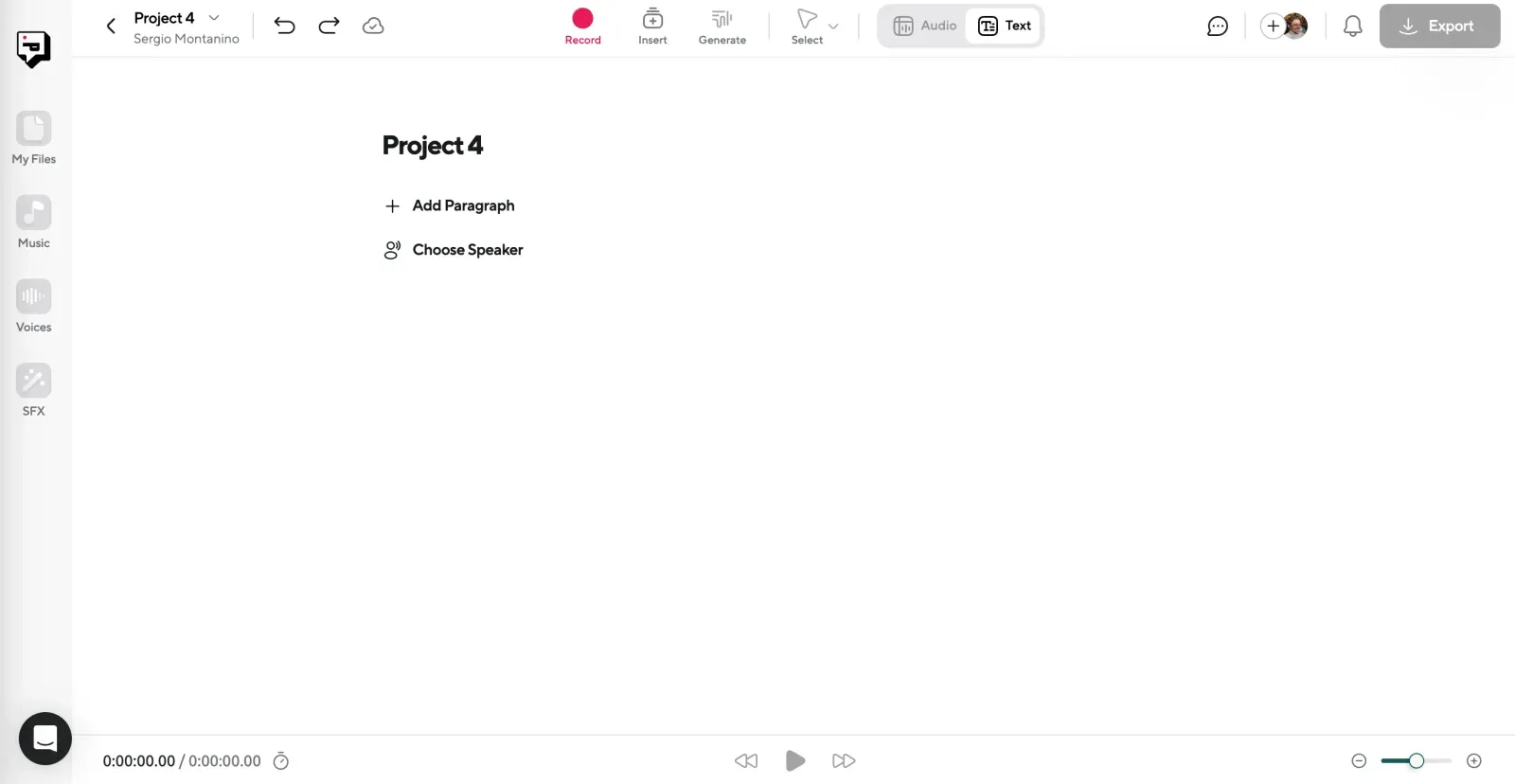

Step 1: Head to AI Voices and Create a Project

Log in to Podcastle, then navigate to AI Voices from your dashboard. Click Create a Project, and you’re ready to start.

Step 2: Pick Your Speaker and Input Your Script

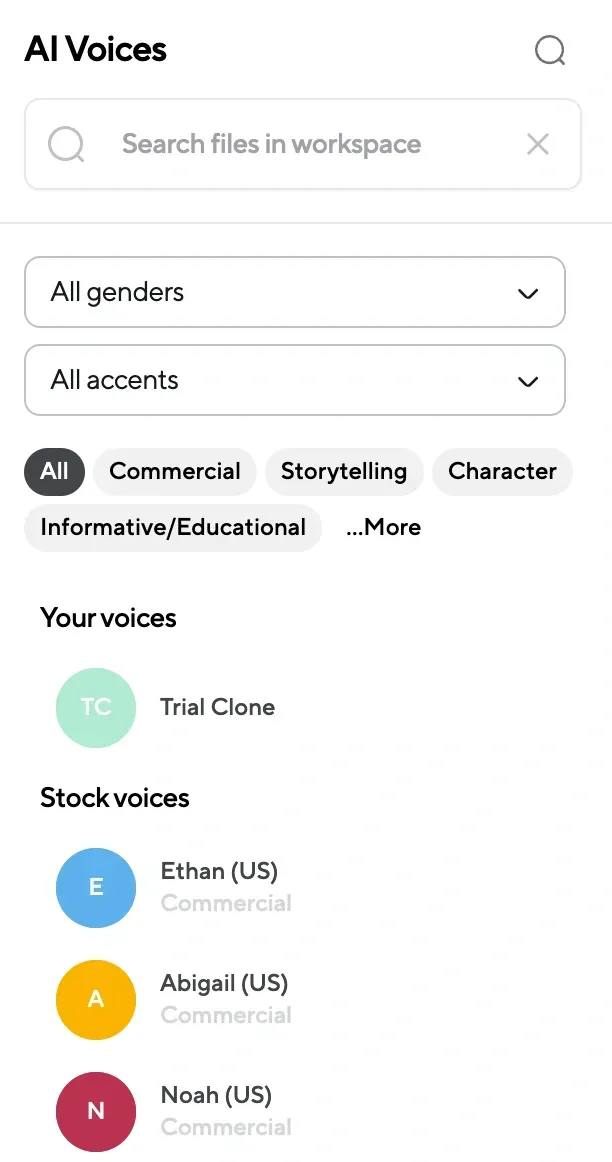

Browse our library of over 200 AI voices, each fine-tuned for different tones and styles. Want a warm, conversational voice? A polished, professional narrator? A dynamic, expressive storyteller? You’ll find the perfect match. Once you choose your voice, paste your script into the editor.

Step 3: Generate Your AI Voiceover

Click Generate, and Podcastle will transform your text into smooth, natural-sounding speech. Unlike other AI voice tools, Podcastle applies intelligent pacing and pronunciation adjustments, so your voiceover flows the way a real person would say it.

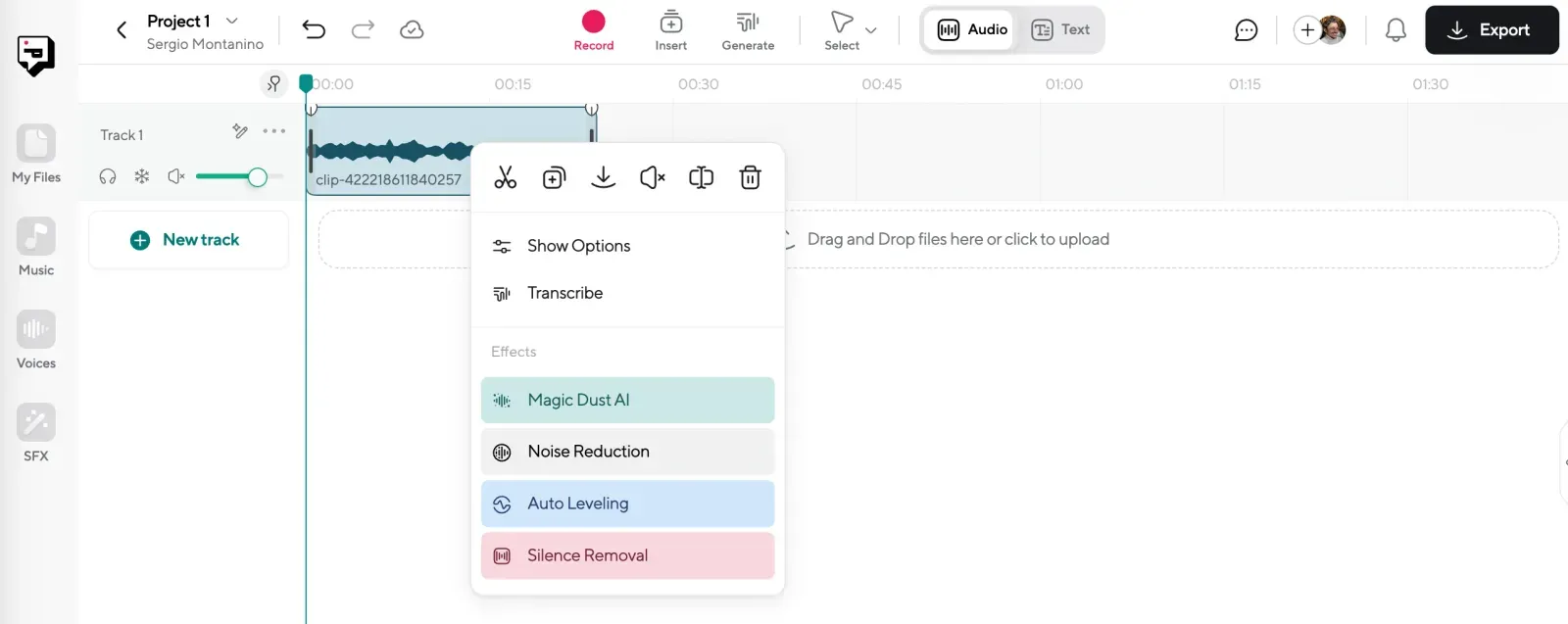

Step 4: Refine with AI Tools & Export

Need to tweak the tone or pacing? Use Podcastle’s built-in AI tools to fine-tune your voiceover. Features like Magic Dust AI for clarity enhancement, noise reduction, and auto-leveling ensure a polished, professional result. When you’re happy with the final output, export your project in the format that fits your needs.

Final Thoughts

AI voices are impressive, but they still have quirks. They can struggle with natural speech patterns, emotional depth, and the unpredictability of human conversation. Some of these problems will likely be solved as the technology advances, but others might always be part of the AI-generated experience.

The good news? With the right tools and adjustments, AI voices can sound significantly better. Choosing the right model, structuring scripts carefully, and tweaking settings can make all the difference.

The future of AI voices isn’t just about making them more human—it’s about making them work seamlessly for storytelling, content creation, and communication.

And that? That’s where things get exciting.