AI voices used to be easy to recognize. They sounded stiff and unnatural. The pacing was off. The tone lacked emotion. That’s no longer the case. AI-generated speech is polished enough to blend into podcasts and videos without raising suspicion. Some voices are so convincing that they can fool the average listener.

But even the most advanced AI still struggles to match the complexity of real human speech. Some flaws are obvious. Others hide in the details: the way a sentence flows, the way a word is pronounced, the way emotion shifts from one phrase to the next.

If you listen closely, the clues are there. Here’s what gives AI away.

1. Lack of Natural Breathing Patterns

A real voice moves with the rhythm of natural speech. People pause to breathe without thinking. Sometimes they take a quick inhale between thoughts. Other times they stop for a second, especially when emphasizing an important point. These pauses happen naturally, shaped by tone and pacing.

AI voices struggle with this. Some flow too smoothly, never stopping for air. Others insert artificial breaths that feel robotic, like they’ve been placed there as an afterthought. In some cases, AI will even add the sound of a breath at the start of a sentence but forget to place one later when it’s actually needed.

A real speaker adjusts their breathing depending on the situation. AI tends to follow patterns instead of reacting to the moment.

What to Listen For:

- No breathing at all: the voice keeps going with no natural pauses.

- Breaths that sound unnatural: perfectly spaced or dropped in at odd moments.

- Breaths that don’t match the tone: a voice that sounds calm but takes sharp, winded breaths.
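
If you want a rough number to go with the “perfectly spaced” cue, one option is to measure how evenly the pauses are spread out. The sketch below is only an illustration, not a detector. It assumes you already have pause start times (in seconds) from an audio editor or similar tool, and the function name `pause_regularity` is hypothetical.

```python
from statistics import mean, pstdev

def pause_regularity(pause_times):
    """Return the coefficient of variation of the gaps between pauses.

    pause_times: sorted pause start times in seconds (assumed input).
    Values near 0 mean the pauses are almost evenly spaced.
    """
    gaps = [later - earlier for earlier, later in zip(pause_times, pause_times[1:])]
    if len(gaps) < 2:
        raise ValueError("need at least three pauses to compare gaps")
    return pstdev(gaps) / mean(gaps)

# Made-up timestamps for illustration only.
evenly_spaced = [0.0, 4.0, 8.0, 12.0, 16.0]
irregular = [0.0, 2.5, 9.0, 10.2, 16.0]

print(pause_regularity(evenly_spaced))  # 0.0
print(pause_regularity(irregular))      # noticeably larger
```

A low score is a hint, not proof. A trained narrator reading a script can also pause very evenly, so treat the number as one clue among several.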

2. Strange Intonation and Rhythm

People don’t speak in a predictable pattern. Some words stretch out for emphasis. Others get rushed. Tone rises and falls depending on the emotion behind a sentence.

AI often gets this wrong. Some voices sound too even, as if every word carries the same weight. Others exaggerate their ups and downs, creating a strange rhythm that feels artificial. In some cases, AI-generated speech develops a “sing-song” effect, where sentences follow the same rise and fall over and over. It doesn’t always sound robotic, but it doesn’t sound human either.

Natural speech is full of variation. AI-generated audio often lacks it.

What to Listen For:

- Overly smooth delivery: sentences flow too perfectly, with no variation in speed.

- Repetitive pitch patterns: a rise and fall that feels unnatural or too rhythmic.

- Strange emphasis: random words get stressed in a way that doesn’t fit the sentence.

3. Limited Emotional Depth

AI voices can imitate emotion, but they don’t fully understand it. They can sound happy, sad, or serious, but the delivery often feels hollow. Real voices carry layers of emotion that shift naturally. AI tends to force those emotions in a way that feels artificial.

A real person’s tone changes depending on context. Sarcasm sounds different from genuine excitement. Frustration comes through in small voice inflections, even when someone is trying to hide it. AI struggles with these nuances. Some voices lean too far in one direction, making emotions feel exaggerated. Others fail to adjust at all, leaving everything at the same intensity.

What to Listen For:

- Emotions that feel overdone: happiness that sounds too bright or sadness that sounds theatrical.

- Flat emotional delivery: no variation in tone, even in places where a real voice would shift.

- Unnatural responses to context: serious topics delivered with an upbeat tone, or jokes that sound lifeless.

4. Inconsistent Pronunciation

AI is great at reading text, but that doesn’t mean it understands language. One of the biggest giveaways is pronunciation. Some words sound perfect in one sentence but completely wrong in another.

A real speaker naturally adjusts based on context. AI follows rules but doesn’t always recognize exceptions. This is especially obvious with words that are spelled the same but pronounced differently depending on meaning, like “lead” (the metal) vs. “lead” (to guide). AI might pronounce them correctly in one instance but get them wrong later.

Regional accents and slang also trip up AI. Some tools add fake accents that sound too generic. Others mispronounce brand names, foreign words, or industry jargon. Even when AI gets the pronunciation right, the emphasis might land on the wrong syllable, making the word feel unnatural.

What to Listen For:

- A word that’s pronounced correctly once but wrong later.

- Struggles with slang or names.

- Odd emphasis on syllables, making common words sound unnatural.

5. Subtle Digital Glitches

Even the most advanced AI-generated audio isn’t flawless. Sometimes words sound slightly distorted. A syllable might stretch too long. A sentence might cut off in a way that feels abrupt.

Some AI voices also carry a faint artificial tone, as if there’s something digital lurking underneath. It’s subtle, but if you listen carefully, you’ll hear it. In some cases, AI-generated voices will even change pitch slightly between sentences, as if different segments were stitched together.

What to Listen For:

- Weird digital noise: a slight metallic or robotic undertone.

- Abrupt cut-offs: words that don’t fade naturally at the end of a sentence.

- Pitch inconsistencies: small shifts in tone that feel unintentional.

6. Overly Clean or Artificial Sound Quality

Most human recordings have at least a little background noise. Even in a professional studio, there’s a natural presence—a faint room tone, the subtle resonance of a voice bouncing off walls, or the tiny imperfections in microphone pickup.

AI-generated audio often lacks these organic details. The sound is too clean, too smooth, almost as if it exists in a vacuum. Some AI tools try to simulate microphone effects, but they struggle to recreate the full texture of a real recording. Even when background noise is added artificially, it often sounds flat or generic.

Another giveaway is the way the voice interacts with the environment. A real speaker’s voice shifts based on the space they’re in. A voice recorded in a small room will sound different from one recorded in an open space. AI-generated speech doesn’t always capture these variations.

What to Listen For:

- A voice that sounds too isolated: no background noise, no environmental presence.

- Artificial room effects: reverb or microphone coloring that doesn’t match any real space.

- A lack of dynamic range: voices that stay at the same level, even in passages that should be louder or quieter.
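
The “lack of dynamic range” cue can be approximated with a simple level comparison. The sketch below assumes the audio has already been decoded into a list of float samples between -1 and 1. The function name `level_range_db` and the frame size are hypothetical choices, and this is a crude measure rather than a standard loudness metric.

```python
import math

def level_range_db(samples, frame_size):
    """Return the dB difference between the loudest and quietest frame.

    samples: decoded audio as floats in [-1, 1] (assumed input).
    frame_size: number of samples per frame.
    """
    levels = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(x * x for x in frame) / frame_size)
        if rms > 0:  # skip digital silence, which has no finite dB level
            levels.append(20 * math.log10(rms))
    if not levels:
        raise ValueError("no non-silent frames found")
    return max(levels) - min(levels)

# Synthetic example: one loud frame followed by one frame ten times quieter.
samples = [0.5] * 100 + [0.05] * 100
print(round(level_range_db(samples, frame_size=100), 2))  # 20.0
```

A small range across a long recording suggests the voice never gets meaningfully louder or softer. Heavy compression on a human recording can produce the same result, so this is a prompt to listen more closely rather than a verdict.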

7. Struggles with Fast or Complex Speech

Not everyone speaks at the same pace. Some people talk fast, especially when excited. Others slow down, emphasizing words in a way that adds weight to a sentence. Real speech isn’t perfectly consistent, and that variation makes it feel natural.

AI voices often miss this. Some sound too steady, as if they’re following a script with no adjustments for pacing. Others struggle when a sentence speeds up. If AI-generated speech tries to replicate fast-talking speakers, it may start to blur words together or lose clarity.

Another red flag is the absence of natural stumbles. Humans sometimes hesitate, restart a sentence, or use filler words like “uh” or “um” without thinking. AI tends to skip these entirely, making the speech feel unnaturally smooth.

What to Listen For:

- Speech that maintains a perfect pace, even when it should speed up or slow down.

- Fast sentences that sound unnatural or lose clarity.

- No stumbles, hesitations, or filler words where they would normally appear.
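
If you have a word-for-word transcript, the filler-word cue is easy to count. The sketch below is a minimal illustration that assumes the transcript is verbatim. The `FILLERS` set and the function name `filler_rate` are hypothetical, and the list is far from complete.

```python
import re

# Illustrative filler list only; real speech has many more.
FILLERS = {"uh", "um", "er", "hmm"}

def filler_rate(transcript):
    """Return the number of filler words per 100 words."""
    words = re.findall(r"[a-z']+", transcript.lower())
    if not words:
        return 0.0
    fillers = sum(1 for word in words if word in FILLERS)
    return 100 * fillers / len(words)

print(filler_rate("So, um, I think, uh, we should go."))  # 25.0
print(filler_rate("We should go now."))                   # 0.0
```

Keep in mind that many transcription tools remove fillers automatically, and edited human recordings often have them cut out. A rate of zero only means something when you know the transcript and the audio are untouched.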

8. Repetitive Speech Patterns

Humans mix things up naturally. Even if someone repeats an idea, they’ll change the way they phrase it. AI often fails at this. Some text-to-speech voices fall into predictable patterns, using the same pacing, tone, or sentence structure over and over.

This is especially noticeable in long-form AI-generated content. After a few minutes, the repetition becomes obvious. The voice may stress certain words in the same way, follow a strict rise-and-fall rhythm, or start sentences in a way that feels overly structured.

Some AI-generated audio also reuses the same phrasing, particularly when the script was machine-written too. If you listen closely, you might notice identical sentence constructions appearing again and again.

What to Listen For:

- A voice that follows the same pacing in every sentence.

- Identical sentence structures appearing multiple times.

- Repetitive stress on certain words, making the speech feel predictable.

9. Unnatural Accent or Regional Inconsistencies

Accents are complicated. Even in the same region, people don’t always pronounce words the same way. AI often struggles with this. Some voices have an accent that sounds off—too generic, too exaggerated, or inconsistent from one sentence to the next.

Even when an AI-generated voice does a good job with an accent, it may still miss the little details that make it sound real. A natural speaker blends words together in subtle ways. AI often separates them too clearly, making the speech feel artificial.

This problem becomes more noticeable with regional slang or dialect-specific phrases. AI voices can mispronounce common local expressions, or they might read them with the wrong intonation.

What to Listen For:

- An accent that feels too polished or inconsistent.

- Regional phrases that sound awkward or mispronounced.

- Speech that separates words too clearly instead of blending them naturally.

10. Mismatched Tone and Context

A real speaker adjusts their tone based on the situation. A serious topic sounds different from casual conversation. Sarcasm has a different rhythm than genuine enthusiasm. AI often fails to pick up on these shifts, making the speech feel disconnected from its meaning.

One of the biggest giveaways is a tone mismatch. An AI-generated voice might sound upbeat while discussing a tragic event or maintain a flat delivery during an exciting moment. Even when AI tries to replicate emotional shifts, it can go too far, making the speech feel exaggerated.

This issue becomes more noticeable in longer content. Over time, a real speaker naturally shifts their energy, adjusting to the conversation or audience. AI voices often struggle to maintain that balance.

What to Listen For:

- A voice that sounds too upbeat or too flat for the topic.

- Lack of natural emotional shifts, making the speech feel mechanical.

- Over-the-top delivery that feels exaggerated instead of genuine.

How to Make Realistic AI Voiceovers with Podcastle

Podcastle’s AI voices are incredibly realistic, making them a powerful tool for creators who need high-quality narration. While AI-generated speech has its tells, Podcastle minimizes those flaws by offering lifelike intonation, smooth pacing, and natural-sounding voices. If you’re looking to create AI voiceovers that sound convincingly human, here’s how to do it.

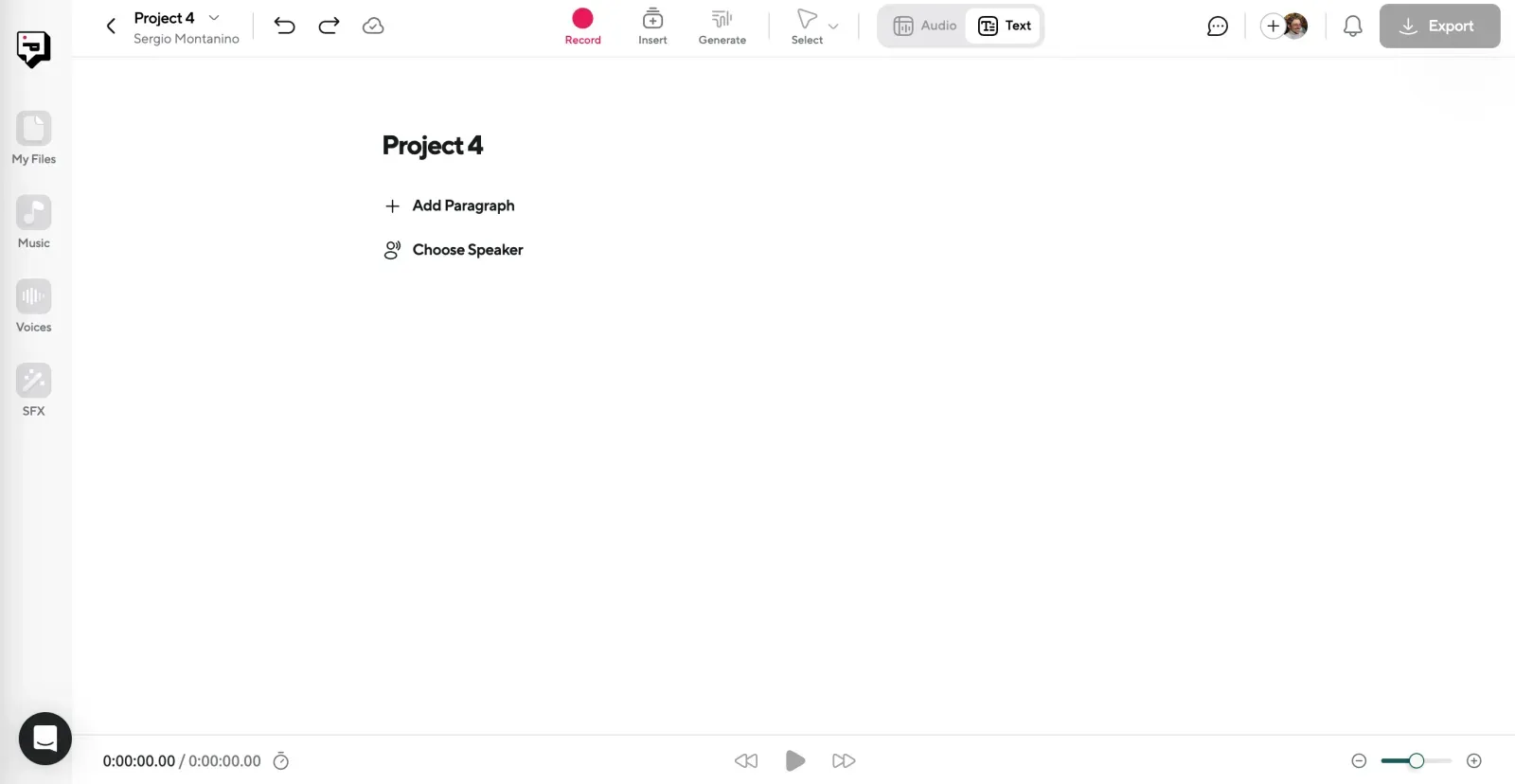

Step 1: Open AI Voices and Start a New Project

Log into Podcastle and go to the AI Voices section. Click Create a Project to start a new voiceover session. This is where you’ll enter your script and select the AI voice that best fits your content.

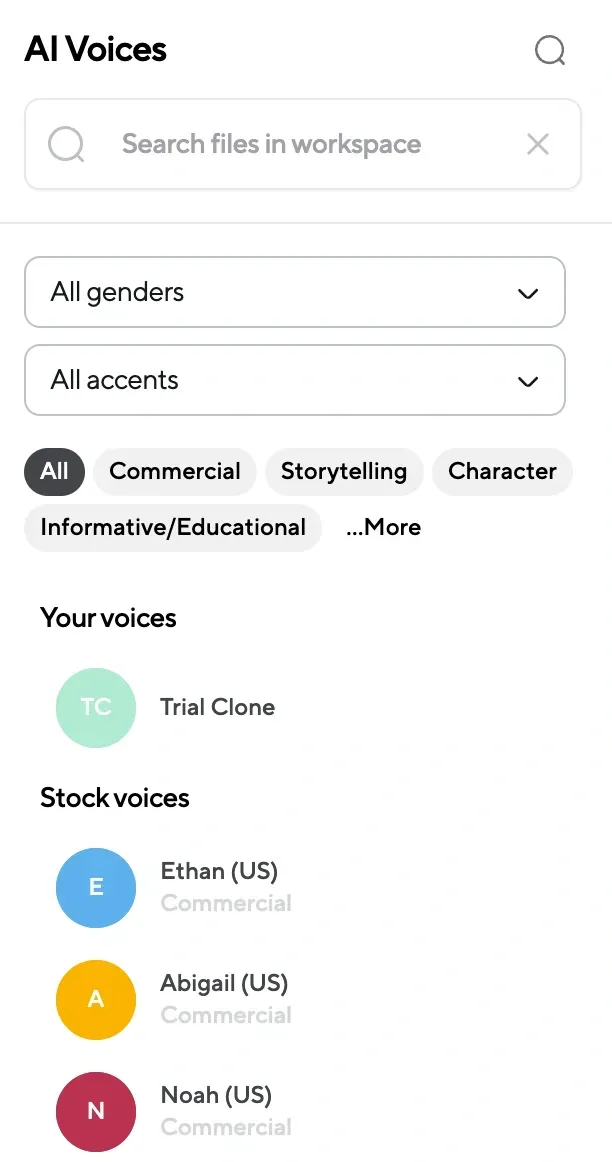

Step 2: Choose a Voice and Add Your Script

Podcastle provides a range of AI voices, each designed to suit different tones and speaking styles. Some sound warm and conversational, while others have a more professional edge. Browse through the options and select a voice that aligns with your project. Once you’ve made your choice, paste or type your script into the editor.

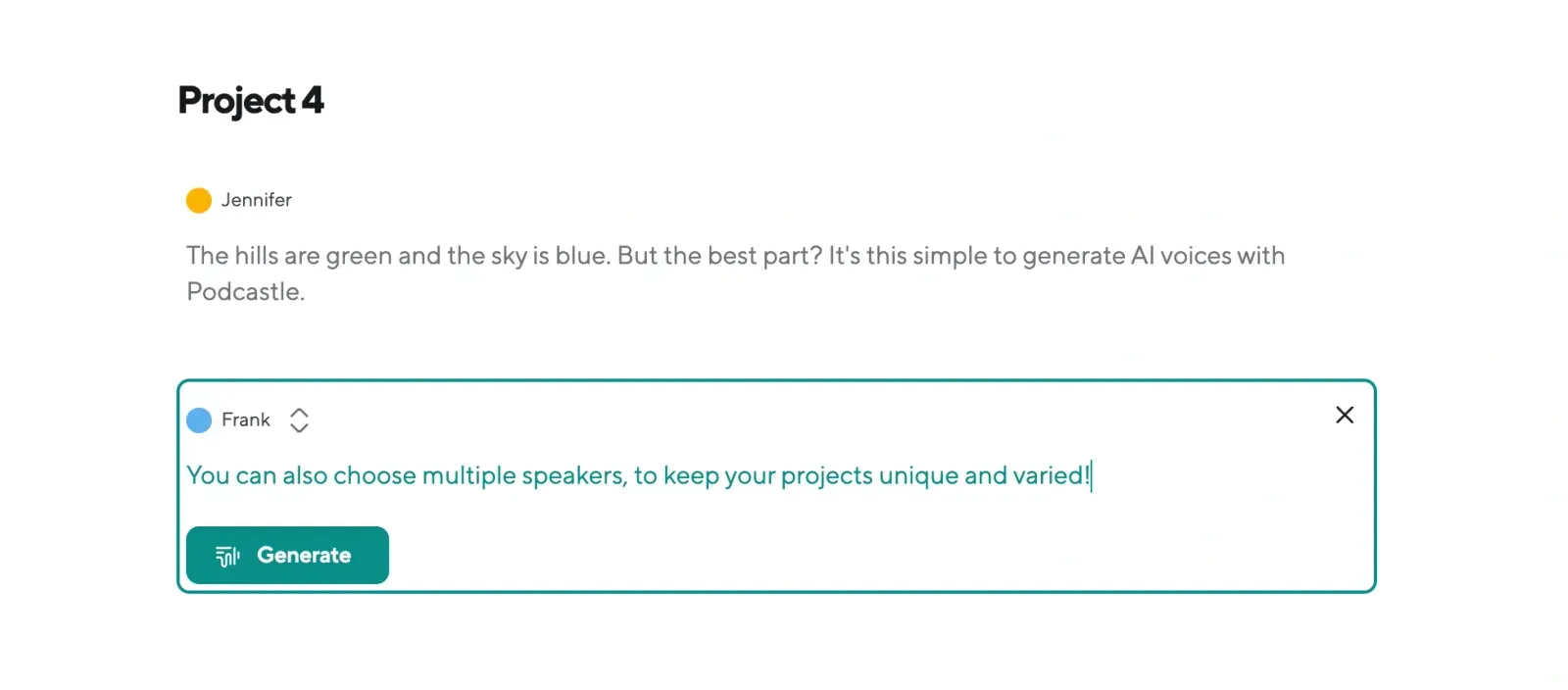

Step 3: Generate Your AI Voiceover

With your script and voice selected, click Generate to produce the voiceover. Podcastle’s AI will process the text, creating a smooth and natural-sounding recording. The result will be a voice that flows realistically, avoiding the stiff or robotic cadence found in lower-quality AI speech.

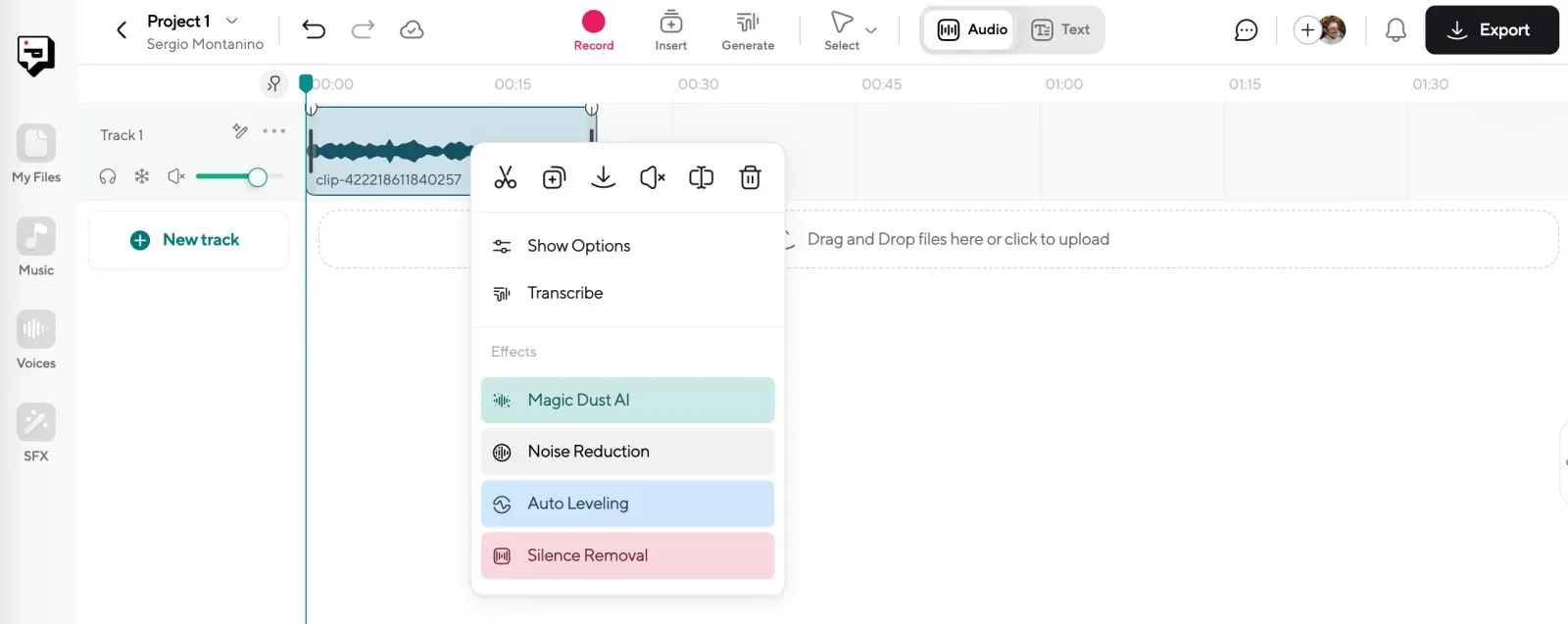

Step 4: Fine-Tune the Audio for a More Human Feel

Even the best AI-generated voices can benefit from subtle refinements. Podcastle includes tools like Magic Dust AI, noise reduction, and auto-leveling, which help polish the audio and make it feel even more organic. Adjust the pacing, tweak pronunciation, and ensure the delivery sounds as natural as possible. Once you’re satisfied, export your final voiceover in your preferred format.

The Clues Are There

AI-generated voices have come a long way. The best ones sound smooth, expressive, and impressively human. With tools like Podcastle, anyone can create AI voiceovers that feel natural enough to blend into real conversations. But even the best AI voices still leave subtle signs that separate them from a real one.