Have you ever wished you could produce a podcast or an audiobook without actually having to record it? With voice synthesis technology evolving so quickly, you can.

But what is voice synthesis, really, and how does it work? Let’s break it down, starting with the basics.

The Basics of Voice Synthesis

At its core, voice synthesis is the process of using AI to generate human-like speech from written text. It’s a form of speech synthesis, where computers analyze patterns in real voices to mimic the way humans speak. This means that rather than relying on prerecorded voice clips, AI can now generate speech on the fly, making it sound incredibly natural and lifelike.

In simple terms, think of voice synthesis as a tool that takes written words and transforms them into spoken language. The AI doesn’t just read the text aloud; it recreates the tone, rhythm, and inflections of human speech, so it sounds like a real person is talking.

The Evolution of Voice Synthesis: From Robotic to Realistic

When voice synthesis first started, the results were far from lifelike. Early speech synthesis systems produced robotic, monotone voices that were hard to listen to and lacked any real emotion. Imagine the early days of computer-generated voices—basic and unnatural.

Fast forward to today, and the story has completely changed. Advances in AI and machine learning have made synthetic speech far more sophisticated. AI voices can now replicate the nuances of human speech, such as tone variation, pauses, and even emotion. They can mimic a wide range of accents, speech patterns, and vocal qualities, making them sound more like real people than ever before.

So, how did we get from robotic voices to the advanced AI voices we have today?

- Data and Algorithms: Early speech synthesis relied on simple algorithms and pre-recorded voice samples. As AI technology progressed, machine learning models started analyzing vast amounts of recorded human speech to learn its patterns. By learning pronunciation, intonation, and speech rhythm from that data, AI has been able to produce more natural-sounding voices.

- Deep Learning: One of the key breakthroughs in speech synthesis came with deep learning technology. This technique allows AI to learn complex patterns in data, improving the quality and naturalness of the speech it generates. As deep learning models continue to improve, we can expect even more lifelike and expressive voices in the future.

Voice Synthesis and Content Creation

Now that we understand what voice synthesis is and how it works, let's explore how this technology is changing the way we create content. Whether you're a podcaster, YouTuber, or content creator of any kind, voice synthesizers are opening up new possibilities for high-quality audio production without needing to record your own voice.

Here are a few ways voice synthesis is revolutionizing content creation:

1. Creating High-Quality Voice-Overs Without Recording

For many content creators, recording voice-overs can be time-consuming and even challenging. This is where AI voice synthesis becomes a game-changer. Instead of spending hours recording the perfect voice-over or relying on expensive voice actors, creators can use synthetic speech to generate professional-sounding voice recordings with just a few clicks.

With AI, you can choose from a variety of voices that suit your content’s tone and style, whether it's a friendly, conversational tone for YouTube videos or a more formal one for educational content. This not only saves time but also offers flexibility for creators who aren't comfortable recording their own voices or who have limited access to professional recording equipment.

2. Repurposing Written Content into Audio

As the demand for audio content continues to rise, turning written material into audio has become a practical solution for reaching wider audiences. Blog posts, articles, eBooks, and other written content can be easily transformed into audio using voice synthesis.

For instance, if you’ve written an in-depth article, you can simply paste it into a voice synthesizer and have it read aloud in a natural-sounding voice. This makes your content more accessible to people who prefer listening to reading and to people with visual impairments. It also lets you repurpose existing content and give it new life in the form of podcasts, audiobooks, or narrated articles.
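Long articles sometimes need to be fed to a text-to-speech tool in smaller pieces. Here is a minimal sketch of one way to do that in Python, breaking the text at sentence boundaries. The function name `split_into_chunks` and the 500-character default are illustrative assumptions, not a documented limit of any particular tool.

```python
import re


def split_into_chunks(text: str, max_chars: int = 500) -> list[str]:
    """Group sentences into chunks that stay within max_chars where possible.

    max_chars is a hypothetical per-request limit chosen for illustration.
    A single sentence longer than max_chars is kept whole as its own chunk.
    """
    # Split after '.', '!' or '?' when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            # Still fits, or this is the first sentence of a new chunk.
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


article = "First sentence. Second sentence! Third one? Fourth."
for chunk in split_into_chunks(article, max_chars=32):
    print(chunk)
```

Splitting on sentence ends rather than at a fixed character count keeps each piece readable on its own, which tends to give a synthesizer more natural places to pause.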

3. Narrating Videos with Ease

Whether you’re creating explainer videos, tutorials, or promotional content, narration plays a key role in engaging your audience. Thanks to voice synthesis, it’s never been easier to add voice-overs to videos without spending time in front of a microphone.

AI-powered synthetic speech can be integrated into video production workflows, allowing creators to generate realistic narration that matches the pace and mood of their visuals. If you’re looking to create educational content, for example, you can use AI to narrate lessons, making them more engaging and interactive for your viewers.

4. Personalizing Audio Content

For creators looking to add a unique touch to their audio content, voice synthesis offers the possibility to create personalized voices. Some AI tools even allow creators to clone their own voices or adjust tone, pitch, and speed to suit their preferences. This means you can ensure your audio content sounds exactly how you want it to, without needing to constantly record new material.
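Outside of point-and-click editors, many text-to-speech engines accept SSML (Speech Synthesis Markup Language), a W3C standard whose `prosody` element carries speed and pitch hints. The sketch below builds a simplified SSML fragment in Python. Whether a given tool accepts SSML is an assumption you would need to check, and the helper name `to_ssml` is hypothetical.

```python
from xml.sax.saxutils import escape, quoteattr


def to_ssml(text: str, rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap text in a simplified SSML fragment with speed and pitch hints.

    rate and pitch use standard SSML values such as "slow", "fast",
    "low", "high", or a relative pitch change like "+2st" (semitones).
    A complete SSML document would also declare a version and namespace
    on <speak>; they are left out here for brevity.
    """
    body = escape(text)  # escape &, < and > so the markup stays well-formed
    return (
        f"<speak><prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{body}</prosody></speak>"
    )


print(to_ssml("Welcome back to the show!", rate="slow", pitch="+2st"))
```

Keeping these settings in markup next to the script means the same text can be re-rendered later with a different pace or pitch without rewriting it.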

Practical Applications Beyond Content Creation

While voice synthesis is primarily transforming the content creation space, it’s also being used in many other industries. Here are just a few examples of how synthetic speech is making an impact:

- Virtual Assistants: Siri, Alexa, and Google Assistant are prime examples of how voice synthesis is being used to power virtual assistants. These systems rely on AI to generate responses in real time, making them incredibly useful in everyday life.

- Customer Service: Many businesses are now using AI-driven voices to handle customer inquiries through automated phone systems or chatbots. These synthetic voices can respond to questions, provide information, and guide customers through various processes without human intervention.

- Audiobooks and Podcasts: The rise of audiobooks and podcasts has led to increased demand for AI-generated narration. Companies are now using AI voice synthesis to produce audio versions of books, articles, and reports quickly and affordably.

How to Create AI Voices with Podcastle

If you’ve ever wanted to create high-quality voiceovers without recording a single word, Podcastle’s AI Voices has you covered. Thanks to advanced generative AI, you can turn any script into natural-sounding speech in seconds. Whether you’re producing a podcast, narrating a video, or adding voiceovers to your content, Podcastle makes it easy to generate professional audio with just a few clicks.

Getting started with AI voice synthesis is simple. All it takes is five easy steps:

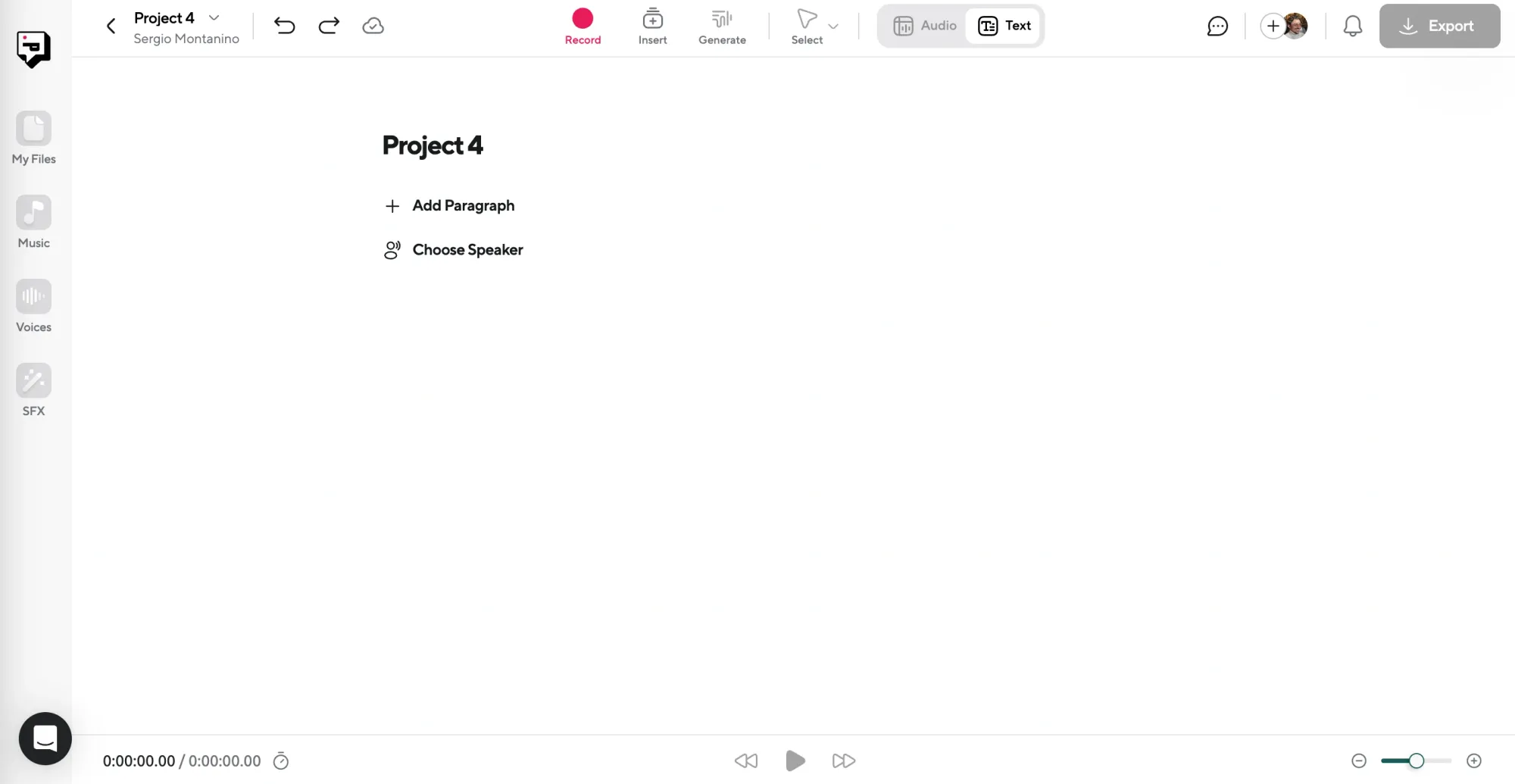

1. Start a New AI Voice Project

Log into Podcastle and navigate to the AI Voices section. Click “Create a Project” to start a new voiceover session. This workspace allows you to input your script, preview different voices, and make adjustments before finalizing your audio.

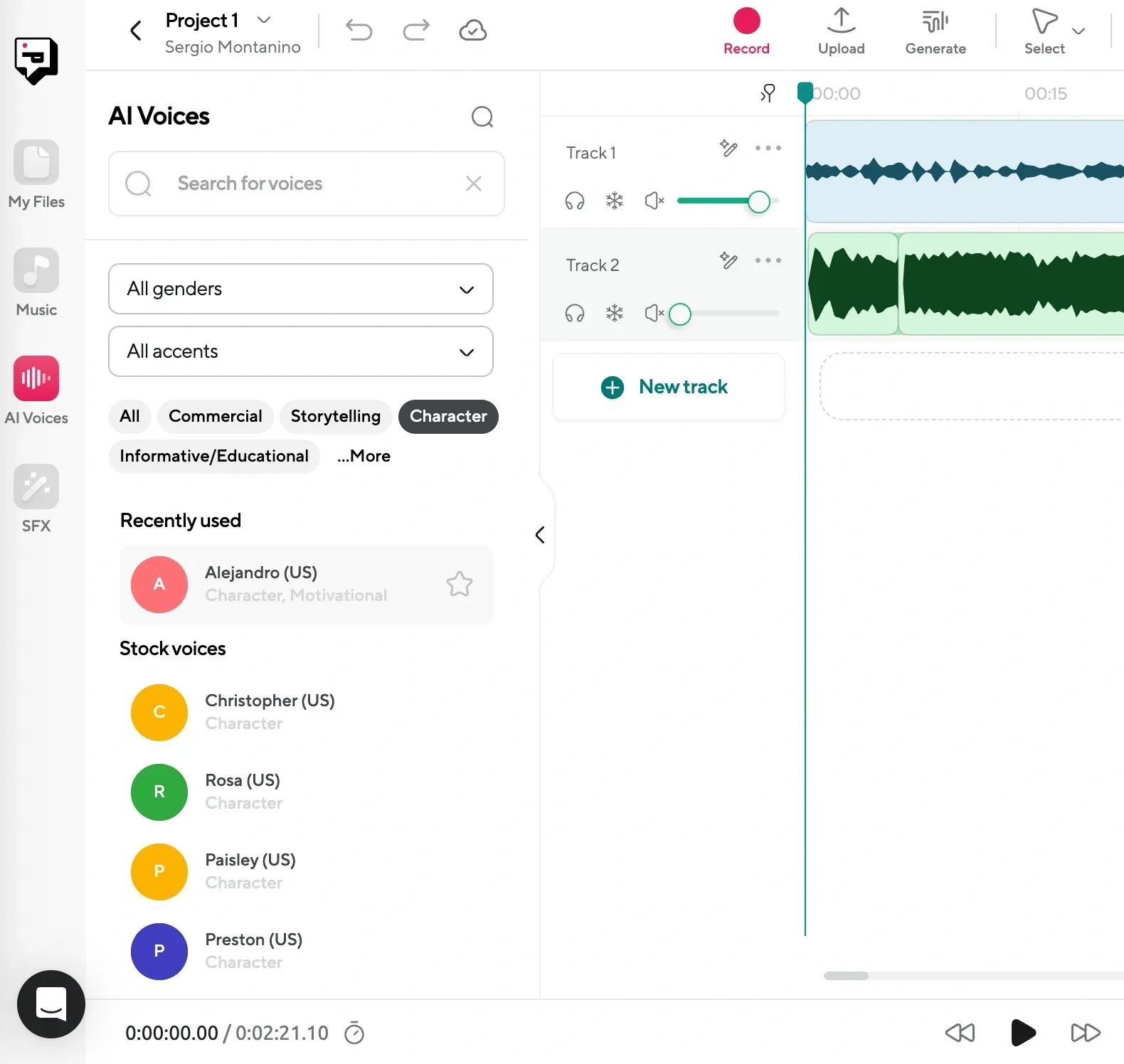

2. Choose an AI Voice & Add Your Script

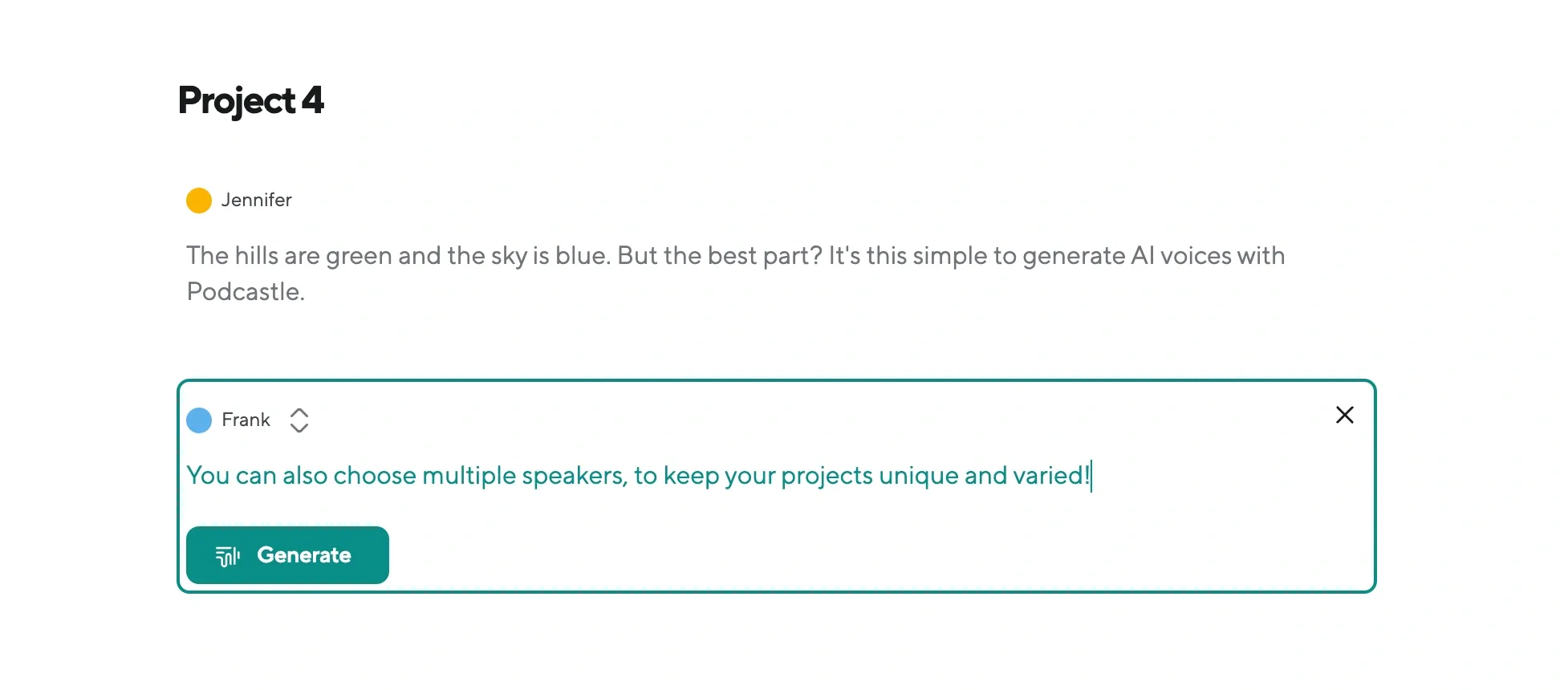

Podcastle offers a diverse selection of AI voices with different accents, tones, and speaking styles. Some sound warm and conversational, while others have a more polished, professional quality. The right voice can shape how your audience perceives the content. After selecting a voice, paste your script directly into the editor.

3. Generate the AI Voiceover

Click “Generate” to turn your text into speech instantly. Podcastle’s AI processes the script and produces an initial narration. This first version provides a strong foundation, but you can fine-tune it for a more natural flow.

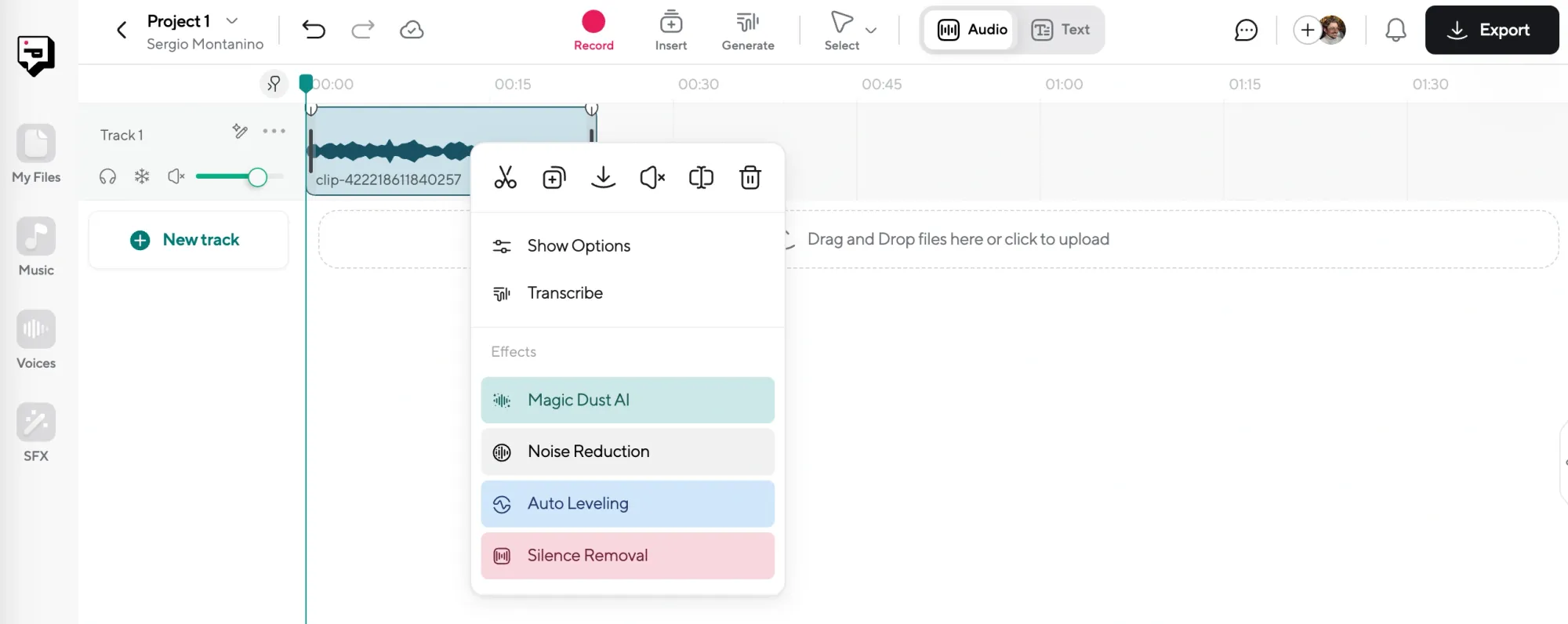

4. Edit & Enhance for a Realistic Sound

Even AI-generated voices can benefit from small refinements. Podcastle’s editing tools let you adjust pacing, tweak pronunciation, and refine intonation to make the speech sound smoother. Features like Magic Dust AI enhance clarity, while noise reduction removes unwanted artifacts. Small text edits—such as adding punctuation or restructuring sentences—can also improve the delivery.
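The point about small text edits can be automated before you paste a script into any editor. Here is a rough Python sketch that collapses stray whitespace and makes sure each paragraph ends with punctuation, so the voice gets a clear place to pause. The function name `tidy_script` is hypothetical, and the rules are deliberately simple: for example, it does not treat a closing quotation mark as sentence-ending punctuation.

```python
import re


def tidy_script(script: str) -> str:
    """Normalize whitespace and add missing end punctuation per paragraph."""
    paragraphs = []
    # Paragraphs are separated by one or more blank lines.
    for block in re.split(r"\n\s*\n", script):
        # Collapse line breaks and repeated spaces inside the paragraph.
        line = re.sub(r"\s+", " ", block).strip()
        if not line:
            continue
        if line[-1] not in ".!?":
            line += "."  # give the synthesizer a clear sentence ending
        paragraphs.append(line)
    return "\n\n".join(paragraphs)


raw = "Welcome   to the show\n\nToday we talk\nabout AI voices!"
print(tidy_script(raw))
```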

5. Export Your Final Audio

Once the voiceover sounds polished, export it in MP3 or WAV format for easy publishing. At this stage, you can also add background music, sound effects, or an intro/outro to elevate the listening experience.

Tips for Choosing the Right AI Voice for Your Content

Selecting the right AI voice can make a huge difference in how your content is received. Here are some tips for choosing the best voice for different types of content:

For Tutorials and Educational Content

Choose a clear and neutral tone that’s easy to follow. A calm, steady pace works well for complex explanations. Avoid voices that are too fast or exaggerated—clarity is key.

For Social Media Voice-Overs

For quick, engaging content, go for a friendly, upbeat voice with a moderate speed. Social media is all about capturing attention quickly, so pick a voice that matches the energy of your content. A touch of enthusiasm can make your message stand out.

For Narration and Audiobooks

Opt for a more natural-sounding voice with some depth. A slightly slower delivery with appropriate pauses will help the listener stay engaged, especially for audiobooks and similar long-form content. A voice with warmth and character is ideal for keeping attention during long narrations.

Ready to Try AI Voice Synthesis?

If you're looking to take your content to the next level with realistic, engaging AI voices, it’s easier than ever to get started. Whether you're creating tutorials, narrating stories, or adding voiceovers to your videos, AI-generated voices can save you time and effort while still delivering high-quality results. Explore the possibilities and see how Podcastle’s AI voices can work for you—no recording studio required.