If there’s one advantage we have at Podcastle, it’s proximity: we get to watch thousands of creators work.

And in 2025, a clear pattern emerged. The creators who consistently produced the best results were the ones who had mastered a few simple, repeatable ways to edit audios that made their content unmistakably better.

Across interviews, podcasts, tutorials, courses, and video episodes, we kept noticing the same habits:

• Mixing for real environments, not for studio headphones

• Editing the transcript first, then the sound

• Cleaning just enough, without removing human texture

• Shaping the pace to hold attention through every segment

• Preserving emotional beats so conversations feel alive

• Using AI to remove friction, not personality

None of this was about perfection. It was all about small, deliberate choices that made a huge difference in how listeners experienced their work.

So instead of giving you theory or generic tips, we’re sharing the actual editing behaviors we saw from top creators inside Podcastle.

These are the patterns they relied on, and the choices that helped them edit audios faster, cleaner, and with far more intention.

6 patterns we saw in how creators edit audios in 2025

1. Creators stopped mixing for headphones, and started mixing for everything

Creators now publish audio that has to survive phone speakers, cheap earbuds, car Bluetooth, smart TVs, and YouTube living rooms.

YouTube alone reported 1B+ monthly viewers of podcast content (and 400M+ hours watched monthly on living-room devices), which means audio has to stay consistent across far more playback situations.

That’s why loudness consistency became the silent retention lever: people forgive a mediocre mic faster than they forgive volume jumps.

Two things can be true at once:

• Your mix can sound “fine” in your headphones.

• It can still feel annoying in the real world if voices drift between sentences, speakers, or sections.
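To make that drift visible rather than just felt, here is a minimal sketch that flags volume jumps between consecutive segments. It assumes you already have one loudness reading per segment from a meter; the `loudness_jumps` name, the sample readings, and the 3 dB threshold are illustrative assumptions, not Podcastle settings.

```python
def loudness_jumps(segment_lufs, max_step_db=3.0):
    """Return the indexes of segments whose loudness differs from the
    previous segment by more than max_step_db."""
    return [
        i
        for i in range(1, len(segment_lufs))
        if abs(segment_lufs[i] - segment_lufs[i - 1]) > max_step_db
    ]


# Hypothetical per-segment readings (LUFS) for one episode.
readings = [-16.0, -15.5, -21.0, -20.5, -15.0]
print(loudness_jumps(readings))  # indexes of segments that start with a jump
```

In this made-up example, the third and fifth segments would be flagged, which is exactly the kind of speaker-to-speaker jump a listener notices on a phone speaker.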

Most big platforms now normalize playback loudness. Spotify is very explicit about their target: -14 LUFS (Normal), and they apply different levels for Loud/Quiet too. They also call out a practical detail creators miss: they keep headroom for lossy encodes and treat True Peak as a safety limit to avoid distortion after encoding.

The standard behind the curtain (why LUFS isn’t a vibe, it’s math)

When people say “LUFS,” the measurement is grounded in ITU-R BS.1770, which defines how integrated loudness and true peak are measured.

Broadcast and streaming practices also commonly reference EBU’s loudness framework (R128).
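As a rough illustration of how integrated loudness and true peak work together, the sketch below computes how much gain a finished mix could take to reach a loudness target without pushing true peak past a safety ceiling. The -14 LUFS default mirrors the Spotify target mentioned above. The -1 dBTP ceiling, the function names, and the meter readings are assumptions for illustration, and the readings are assumed to come from a BS.1770-style meter rather than being measured here.

```python
def normalization_gain_db(measured_lufs, true_peak_dbtp,
                          target_lufs=-14.0, peak_ceiling_dbtp=-1.0):
    """Gain in dB that moves a mix toward target_lufs without letting
    true peak exceed peak_ceiling_dbtp. Both defaults are illustrative."""
    loudness_gain = target_lufs - measured_lufs         # what the target asks for
    peak_headroom = peak_ceiling_dbtp - true_peak_dbtp  # what the peaks allow
    # Take the smaller value so the peak ceiling always wins.
    return min(loudness_gain, peak_headroom)


def db_to_linear(gain_db):
    """Convert a dB gain into the multiplier applied to each sample."""
    return 10 ** (gain_db / 20)


# Hypothetical meter readings: a quiet mix with modest peaks.
gain = normalization_gain_db(measured_lufs=-20.0, true_peak_dbtp=-6.0)
print(f"apply {gain:+.1f} dB (x{db_to_linear(gain):.2f})")
```

With these sample readings the loudness target alone would ask for +6 dB, but only 5 dB of peak headroom is available, so the sketch stops at +5 dB. That is the "True Peak as a safety limit" idea in miniature.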

What we saw creators do (the ‘new normal’ workflow)

Instead of “mix until it sounds good,” this is what the best of our creators did in 2025:

1. Mix for intelligibility first (voice sits forward; music doesn’t fight it)

2. Then measure and normalize (so it travels across platforms)

3. Then sanity-check on at least two devices (phone speaker + earbuds)

So the question is,

How to level audio volume automatically for spoken voice?

The beauty of AI is that it makes your workflow cleaner. Our creators fixed the loudness problem simply by using Podcastle’s Auto-Leveling tool instead of spending hours adjusting compressors and watching loudness meters.

It became the quickest way to avoid the #1 listener complaint: sudden loudness jumps.

How they did it (tiny workflow):

1. Upload or record the track

2. Open the AI Assistant (sparkle icon)

3. Apply Auto-Leveling

4. Export a consistently balanced episode, no manual mixing needed

This gave creators “broadcast-ready” dialogue without touching any engineering tools.

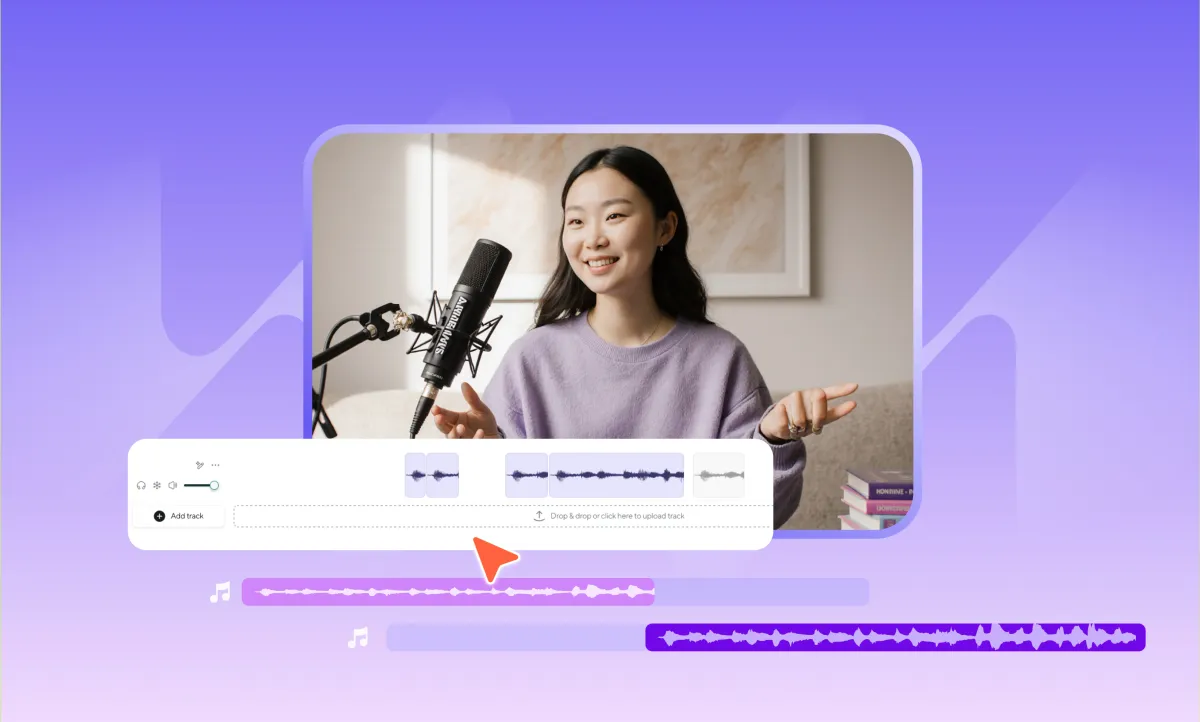

2. “Cut by text” became the default first pass

The real shift is that the timeline became a document.

In 2025, a lot of creators stopped “editing audio” first and started editing meaning first. The mental model flipped:

You first delete the sentence, then the waveform follows.

That’s the core of an AI transcription-based audio editing workflow (cut by text): you shape the story in language, then polish sound after.
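For the curious, here is a minimal sketch of the idea behind "the waveform follows": if every transcript word carries a start and end time, deleting words in text tells you which audio ranges to keep. The `Word` and `keep_ranges` names and the timestamps are hypothetical, and this is only an illustration of the concept, not a description of how Podcastle implements it.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


def keep_ranges(words, deleted):
    """Return merged (start, end) audio ranges for words that were kept.

    `deleted` is a set of word indexes removed in the transcript."""
    ranges = []
    prev_kept = None
    for i, word in enumerate(words):
        if i in deleted:
            continue
        if ranges and prev_kept == i - 1:
            # Neighbouring kept word: extend the range across the natural gap.
            ranges[-1] = (ranges[-1][0], word.end)
        else:
            ranges.append((word.start, word.end))
        prev_kept = i
    return ranges


# Hypothetical transcript with word-level timestamps.
words = [
    Word("So", 0.0, 0.3),
    Word("um", 0.4, 0.7),
    Word("welcome", 0.8, 1.3),
    Word("back", 1.35, 1.7),
]
print(keep_ranges(words, deleted={1}))  # ranges left after deleting "um"
```

Note that the natural gap between "welcome" and "back" stays inside the kept range, which is one reason text-first cuts tend to preserve human pacing.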

Why this won (even for skilled editors)

Text-first editing solves three pain points at once:

1. Speed: you can cut minutes of ramble in seconds by deleting lines

2. Searchability: find the one moment you need by searching words, not scrubbing audio

3. Structure: you can see repetition, weak intros, and tangents the way you would in a draft

What we observed from creators working in Podcastle

Across thousands of editing sessions, we saw the same patterns emerge:

• Creators did their structural edits almost entirely in text. They skimmed, highlighted, and deleted like they were editing a Google Doc. Repetition? Gone. Rambling intros? Tightened in seconds.

• They used the transcript to find moments instantly. Instead of scrubbing the waveform, they searched for words, phrases, or topics, which is especially useful for long interviews and narrative shows.

• They trusted transcript editing to protect the natural voice. Because they weren’t zooming into waveforms and over-cutting breaths, they preserved human pacing and avoided robotic-sounding joins.

• The transcript became the creative step; the audio panel became the finishing step. This separated “shaping the story” from “polishing the sound,” which made creators far more confident and far more efficient.

Tiny checklist: avoid the 3 classic text-edit mistakes

• Don’t delete every “um” → delete clusters that slow meaning

• Don’t hard-cut every pause → keep pauses that signal thought/emphasis

• After big deletions, always do a quick listen for cadence and tone continuity
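The first rule in that checklist ("delete clusters, not every um") can be sketched as a small scan over transcript tokens. The filler list, the `filler_clusters` name, and the two-in-a-row threshold are assumptions for illustration. A real transcript editor would also need timestamps and a listen-back, as the third rule says.

```python
FILLERS = {"um", "uh", "er"}  # assumed filler list, adjust per speaker


def filler_clusters(tokens, min_run=2):
    """Return (start, end) index pairs (end exclusive) for runs of at least
    min_run consecutive filler words. Single fillers are left alone."""
    clusters = []
    run_start = None
    for i, token in enumerate(tokens + [""]):  # sentinel closes a trailing run
        if token.lower().strip(",.") in FILLERS:
            if run_start is None:
                run_start = i
        else:
            if run_start is not None and i - run_start >= min_run:
                clusters.append((run_start, i))
            run_start = None
    return clusters


tokens = "I think, um, uh, um, the point is, um, clarity".split()
print(filler_clusters(tokens))  # only the three-filler run is flagged
```

The lone "um" near the end is deliberately kept: it reads as a thinking beat, not a stall.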

3. Creators learned that “too clean” can sound worse than noisy

The funny thing about noise reduction in 2025 is that creators weren’t fighting noise anymore. They were fighting over-cleaning.

The rise of AI tools made it incredibly easy to remove hiss, hum, and background distractions, but it also exposed a bigger challenge:

• You don’t lose listeners because of noise. You lose them because over-cleaning makes voices sound fake.

Across thousands of sessions, creators ran into the same artifact patterns:

• Metallic chirps when denoise is pushed too far

• Gating that chops natural breaths

• Reverb shadows that get louder as noise gets quieter

• Speech texture loss, especially in soft-spoken voices

And the problem wasn’t that the tools were bad. It was that most people didn’t know when to stop.

How to edit audios without sounding over-edited with Podcastle

We noticed a very consistent behavior among successful creators using Podcastle’s cleanup tools:

• They treated noise reduction as a “dose,” not a fix-all.

They removed just enough distraction for clarity, but never enough to sterilize the voice.

• They ran cleanup after structural edits.

Fix noise once, on the final timeline, not 20 times after every cut.

• They prioritized “presence” over “perfection.”

A clear but natural voice always outperformed a perfectly silent, robotically-cleaned one.

The practical rule our creators followed

Clean the environment, not the person.

Listeners can tolerate a fan, a hum, a slight room sound, but they won’t tolerate a voice that doesn’t sound human anymore.

Tiny guideline:

• Light cleanup → great

• Mid cleanup → great

• Heavy cleanup → probably too far

AI made noise reduction easy…but in 2025, the real skill was knowing when to leave the human texture intact.

4. Editing remote conversations became its own craft

2025 was the year remote conversations finally became normal for every kind of creator: podcasters, course makers, interviewers, YouTubers, journalists, educators.

But with that came a new editing reality:

The biggest audio problem wasn’t mic quality, but the room the guest recorded in.

We consistently saw the same issues:

• One speaker in a treated room

• Another in a kitchen

• Another in a glass office

• Another on a laptop mic facing a wall

Even with good software, you can’t fully “un-ring” room echo. And that mismatch made conversations feel stitched together, even when the content was great.

Audio editing workflow for remote interviews and guests

The best creators solved this in two places: before recording and after.

Before recording (the guest checklist they reused constantly):

• Sit close to the mic

• Face a soft surface (curtains, couch, bed)

• Avoid hard rooms (tile, glass, kitchen)

• Turn off fans/AC if possible

These tiny adjustments changed more than any plug-in could.

After recording (the Podcastle workflow):

• Volume-level both speakers so neither dominates

• Use subtle EQ to make voices “sit” in the same perceived space

• Keep some room tone under transitions so the conversation feels continuous

The insight creators kept repeating

“Good audio is something you prevent, not something you fix.”

Remote recording made creators rethink their entire pipeline. The ones who adapted spent less time editing because they learned how much of the problem could be solved before the file ever hit the timeline.

5. Creators started editing the pace of a conversation

In 2025, pacing became a creative tool.

This shift came from a simple metric change: platforms like YouTube and TikTok now weigh retention heavily. A too-slow segment can cause a dip, and a dip can ruin the whole episode’s performance.

So creators edited for flow, not flaw.

Here’s what they consistently did inside Podcastle:

Micro-tightening: They removed tiny gaps (0.1–0.2s) to keep dialogue feeling alive without rushing it.

Intentional pauses: They kept pauses before insight moments or the answers to important questions, because it helped emphasize an idea and hold listeners.

Momentum arcs: In interviews, they boosted the pace in the middle, the area where listener drop-off usually begins.

“Scan listening” edits: Creators shortened intros drastically because they knew listeners decide in the first 15–20 seconds whether to stay.
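The micro-tightening and intentional-pause habits above can be sketched as one rule over speech segment timings: trim ordinary gaps a little, and leave long pauses alone. Every threshold here is an assumption chosen for illustration (the 0.15 s trim sits inside the 0.1–0.2 s range mentioned above), and `tighten_gaps` is a hypothetical helper, not a Podcastle feature.

```python
def tighten_gaps(segments, max_gap=0.35, trim=0.15, keep_pause_over=0.8):
    """Shift speech segments earlier by trimming ordinary gaps.

    segments: list of (start, end) in seconds, sorted and non-overlapping.
    Gaps longer than keep_pause_over are treated as intentional and kept.
    Gaps between max_gap and keep_pause_over lose up to `trim` seconds
    but never shrink below max_gap. Shorter gaps are untouched.
    """
    if not segments:
        return []
    out = [segments[0]]
    for (start, end), (_, prev_end) in zip(segments[1:], segments):
        gap = start - prev_end
        if max_gap < gap <= keep_pause_over:
            gap = max(max_gap, gap - trim)
        new_start = out[-1][1] + gap
        out.append((new_start, new_start + (end - start)))
    return out


# Hypothetical timings: a 0.5 s gap, then a 1.2 s pause before a key answer.
segments = [(0.0, 2.0), (2.5, 4.0), (5.2, 6.0)]
for start, end in tighten_gaps(segments):
    print(f"{start:.2f} -> {end:.2f}")
```

In this example only the first gap is shortened. The longer pause before the final segment survives, because in this sketch anything over 0.8 s is assumed to be there on purpose.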

This year was all about creators recognizing that the way you edit audios changes how people experience a conversation, not just how they hear it.

6. Editing for emotional clarity became the new norm

Building on the pacing work above, we also noticed that when working with guests, co-hosts, or narrative scripts, creators began editing for emotional clarity.

We saw this play out in three specific ways:

• Preserving reactions: Creators stopped deleting overlapping “yeahs,” soft laughs, and quick affirmations. They realized these micro-responses made remote conversations feel in-person.

• Re-timing energy: If a co-host’s reaction lagged due to remote latency, creators nudged it earlier to match the speaker’s emotional beat. It made conversations feel tighter and more human.

• Highlighting emotional “anchors”: Many creators boosted or isolated a single sentence in a long discussion, the emotional thesis, and built the pacing around it.

Podcastle made this kind of storytelling edit easier with transcripts and multi-track alignment. Our creators could edit audios while keeping the emotional contour intact, or even amplifying it.

It’s the kind of nuance that was once only possible in high-end studios. Now creators do it from a laptop.

Creator audio editing trends in 2026

Based on the patterns we’re already seeing across thousands of our projects, here are the creator audio editing trends that will define 2026.

1. AI will handle the “first 80%,” creators will focus on the last 20% that makes it human

The trend is unmistakable: creators no longer want to babysit EQ curves or manually diagnose noise issues. They want to open a project and see the fundamentals already done—cleaned, leveled, transcribed, aligned. In 2026, AI will increasingly provide a pre-edited baseline: usable pacing, balanced loudness, reduced noise, and a transcript that’s already structured. Creators will jump straight into shaping story, emotion, and flow instead of engineering.

2. Editing will shift from “fixing” to “directing”

The best creators are already thinking like directors, not technicians. In 2026, this becomes the norm. Edits will focus on narrative arcs, clarity of ideas, and emotional moments, while AI quietly handles the technical layer underneath. The question will shift from “How do I fix this?” to “Does this moment land?” Tools will surface pacing suggestions, spotlight emotional beats, and even propose cuts that improve retention.

3. Room simulation will replace harsh dereverb

One of the hardest problems in audio, bad room tone from guests, won’t be solved by dereverb alone. Instead, 2026 tools will reconstruct or simulate a more natural shared environment, making remote guests sound like they were recorded in the same space. Instead of trying to erase the room, AI will learn to rewrite it. Early versions of this are already appearing, and creators are hungry for it.

4. Pacing analytics will become as common as loudness analytics

2025 showed that creators edit timing intentionally. In 2026, editing tools will start surfacing pacing insights automatically: identifying long stretches of low energy, highlighting repetitive segments, and flagging moments where listener drop-off is likely. Think of it as a retention meter for spoken word. Instead of guessing, creators will have actual data behind their pacing decisions.

5. Transcript-first editing will become the default across every format

Not just podcasts. Everything: interviews, explainers, video essays, educational content, audio-first video. In 2026, creators will start projects assuming they’ll edit audios through text first. It will feel unnatural to scrub a timeline to find a moment when you can simply search for it. Transcripts will evolve beyond being a representation of the audio and become the canvas.

6. Personalization will replace presets

Creators won’t want generic “voice cleanup” buttons anymore. They’ll want tools that know their voice, their mic, their environment, and their preferred style. Expect voice profiles, noise fingerprints, pacing signatures, and style presets that adapt to each creator over time. Tools will learn the difference between “your natural breaths” and “unwanted noise,” between “your conversational pace” and “accidental drift.”

7. Editing will increasingly blend across formats

Creators won’t think in “podcast,” “video,” or “course” buckets anymore. Tools will treat all spoken content the same and optimize for where it's going: social cutdowns, TikTok hooks, YouTube chapters, line-by-line scripts. In 2026, editing becomes platform-aware—and your project will be shaped differently depending on whether it’s headed to headphones, speakers, shorts, or YouTube living rooms.

How to apply this: a simple workflow to edit audios like our best creators

Patterns are useful. But they become powerful when they turn into a repeatable workflow you can run on every project.

Here’s the practical blueprint we saw again and again from creators who consistently shipped great work inside Podcastle.

Step 1: Start with a clean enough recording

The best creators don’t rely on editing to rescue a bad setup. Before they even hit record, they:

• Sit closer to the mic than feels natural

• Point themselves toward something soft (curtains, bed, couch, clothes rack)

• Avoid hard, reflective rooms whenever possible

This doesn’t make it “studio perfect.” It just makes the edit forgiving. When you edit audios that started reasonably clean, every tool you apply works better.

Step 2: Edit the transcript, not the waveform

Once the recording is done, their first move isn’t EQ or compression—it’s structure.

They open the transcript and:

• Cut repetition and rambling intros

• Move sections so the strongest ideas land earlier

• Delete questions that don’t go anywhere

You’re deciding what the episode is, before you worry about how it sounds. When you edit audios this way, you avoid spending time polishing segments that should’ve been cut entirely.

Step 3: Shape the pace, not just the words

With the structure locked, they zoom into timing:

• Tighten micro-gaps so dialogue feels alive

• Keep intentional pauses before important lines or reveals

• Shorten slow, meandering sections where retention usually dips

This is where you’re editing for how it feels in real time, not just how it reads. You’re designing the listener’s experience minute by minute.

Step 4: Clean just enough, never too much

Only now do they bring in cleanup:

• Light noise reduction to remove obvious distractions

• No aggressive settings that flatten the voice

• A check-in listen on cheap earbuds or a phone speaker

The goal is clarity with texture. If you can still hear that it’s a real human in a real room, you’re in the right zone.

Step 5: Level once, for every place your audio will live

Finally, they normalize loudness so the episode travels well:

• Use Auto-Leveling (or another loudness tool) as a single, global step

• Make sure different speakers feel even in volume and presence

• Export with a preset that matches where the audio is going (podcast, video, social)

This is the moment where your project stops being “a recording on your timeline” and becomes something ready for headphones, cars, smart TVs, and everything in between.

It’s also the point where a lot of listeners quietly decide whether your work feels “professional” or not.

Step 6: Do one “human pass” at the end

The last step our best creators never skip is simple:

• Hit play.

• Experience it like a listener.

• Ask: Would I stay? Would I share this?

That final, honest pass is where you catch the tiny things no meter can see, and where the way you edit audios turns into the way you earn attention.

FAQs

How do I edit audio?

Start by shaping the story before the sound. Begin with a transcript (if available) and remove repetition, dead starts, and tangents. Then adjust pacing by tightening small pauses and keeping the ones that add meaning. Once the structure feels right, run light cleanup (noise reduction + leveling) and do a final listen on a phone speaker to make sure it plays well across real environments.

How to edit audio to avoid copyright issues?

Use only material you have rights to: your own recordings, properly licensed music, or royalty-free libraries. Avoid sampling copyrighted songs, sound effects, or clips unless you have explicit permission. When in doubt, use tools that provide built-in royalty-free options. If you're editing interviews, ensure that guests consent to having their audio used and distributed.

What is the best app for editing audio?

There’s no single “best,” but the best tool is the one that supports how modern creators actually work: transcript-based editing, AI cleanup, one-click leveling, and easy exporting. Podcastle, for example, lets you edit by deleting text, clean up voice with AI, and instantly normalize loudness, making it ideal for podcasts, interviews, and spoken-word content without needing a full studio setup.

How to remove background noise from recordings without artifacts?

Use gentle noise reduction instead of heavy cleanup. Start with a light pass to remove constant distractions (hiss, hum, fans), then stop before the voice begins sounding metallic or robotic. Always clean after your structural edits, not before. A good rule: if the voice loses warmth or becomes too smooth, you’ve gone too far.

How to make voice audio sound professional without a studio?

Record close to the mic, face something soft (curtains, pillows, clothes), and avoid hard reflective rooms. Then, enhance the voice with subtle AI cleanup, mild EQ, and automatic leveling. Many creators get “studio-like” results from a bedroom setup simply by controlling reflections and keeping the voice consistent in volume and distance.

How to remove filler words and long pauses from audio?

Use a transcript-first workflow. Delete clusters of “ums,” “uhs,” and repeated phrases directly from the text. For pacing, tighten long gaps but keep intentional thinking pauses—they often make the conversation feel more natural. Avoid cutting every breath or silence, as this can make the edit feel robotic.

How to fix echo and room reverb in creator recordings?

You can reduce mild reverb with cleanup tools, but severe echo is hard to undo. The most effective approach is preventative: record closer to the mic, place soft materials around the recording space, and avoid reflective surfaces. After recording, use appropriate tools sparingly and level the speakers so they feel like they’re in the same space. A little room tone under transitions can also make the conversation feel more cohesive.